Collapsar77

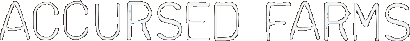

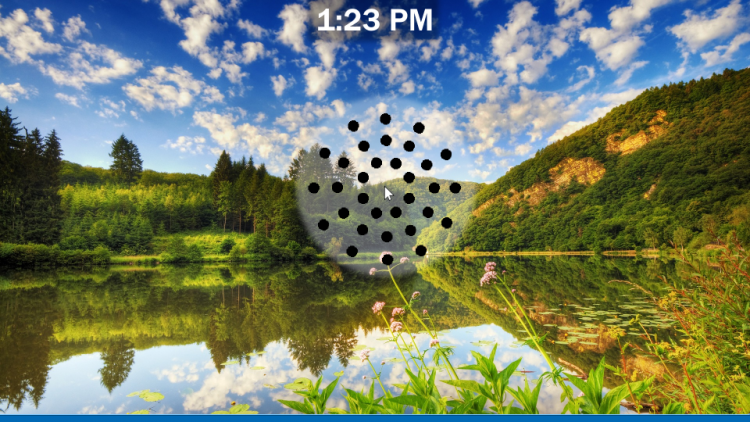

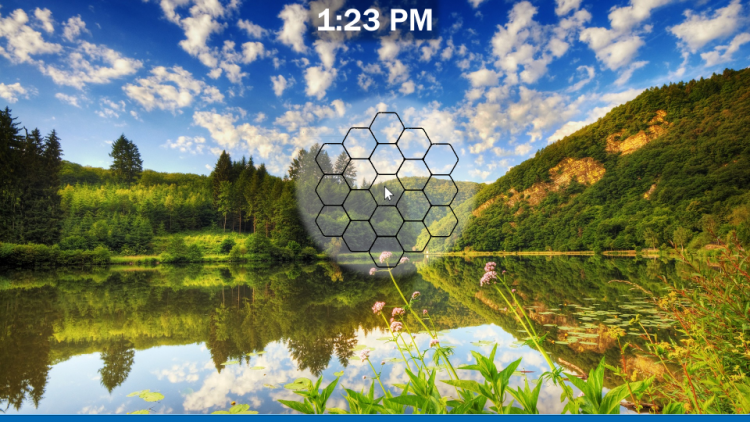

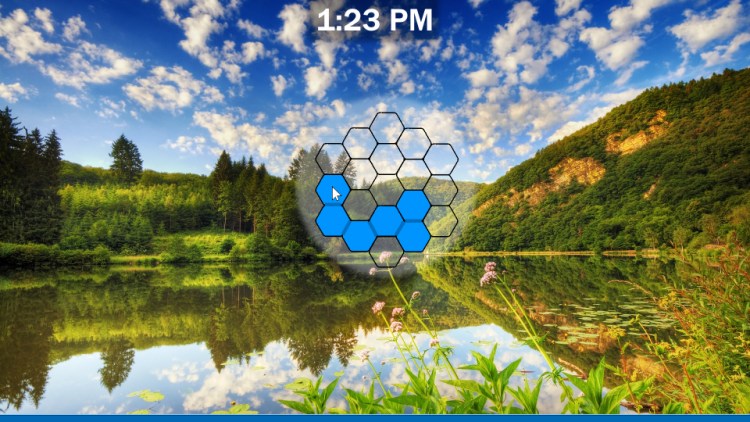

In the spirit of brainstorming, here are a couple of thoughts I've had in the interim. I mentioned before the idea of bringing up a phone-password-style grid to formalize the gestures. I think you could combine that with the weapon-wheel concept to make something like what's pictured. Call this a first draft; I call it a "Constellation Wheel". As is, it has the power of gestures without the ambiguity about what you actually meant to invoke, which is what makes gestures misfire. It does require more precise aiming than the weapon wheel, which is a problem. It also doesn't tell you what you're invoking; you kind of have to just know. One way to ease the precise-aiming problem is to tile regular polygons like hexagons instead, and I have a couple of pictures of what this "Hexagesture" layout would look like.
Its ADVANTAGE over the weapon wheel is that it can invoke a stupidly large number of things quickly. The weapon wheel example is amazing, but I'm still not sure how well it scales once you're choosing between more than a dozen items. Granted, there are ways to sub-categorize: programs, programs beginning with the numbers 1-9, programs beginning with A-E, etc. Heck, I can even imagine adjusting the categories algorithmically based on how much is installed where. If you install a BUNCH of programs starting with A, maybe "programs A-E" splits into "programs A" and "programs B-E". You get the point. But somewhere in there, the inherent complexity of the maze of sequential gestures you're navigating is maybe going to become a problem. I think the weapon wheel will be fastest for commonly used items and actions but will struggle at picking specific items out of large sets. The Constellation Wheel or Hexagesture concept will, conversely, not be as good as the weapon wheel for common items, but it would excel at picking specific items out of large sets and could be considered as a supplement to it. It is, however, less intuitive.
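The automatic re-categorization idea (splitting "programs A-E" into "programs A" and "programs B-E" once A gets crowded) could work roughly like this. It's a minimal Python sketch under my own assumptions: the function name `build_buckets` and the 12-item cap (borrowed from the "more than a dozen items" worry) are hypothetical, and it only groups by first character.

```python
from collections import Counter


def build_buckets(names, max_per_bucket=12):
    """Group names into contiguous first-character ranges ("A", "B-E", ...).

    A range keeps absorbing the next letter until adding it would push the
    bucket past max_per_bucket. A single letter that alone exceeds the cap
    still gets a bucket of its own (it would need a second-letter split).
    """
    counts = Counter(name[0].upper() for name in names if name)
    buckets = []
    start = end = None
    total = 0
    for letter in sorted(counts):
        count = counts[letter]
        # Close the current range if this letter would overflow it.
        if start is not None and total + count > max_per_bucket:
            buckets.append((start, end, total))
            start, total = None, 0
        if start is None:
            start = letter
        end = letter
        total += count
    if start is not None:
        buckets.append((start, end, total))
    # Label each bucket as a single letter or a "first-last" range.
    return [(a if a == b else f"{a}-{b}", n) for a, b, n in buckets]


programs = [f"A-program {i}" for i in range(13)] + [
    "Blender", "Brave", "Bitwarden", "Calibre", "Chrome",
    "Everything", "Excel", "Epic Games", "Edge",
]
print(build_buckets(programs))
```

With 13 programs starting with A, "A" ends up in a bucket of its own while B through E share one, which is exactly the split described above.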
It's also potentially targeting a small subset of items: ones I need or want to invoke quickly, and use often enough to remember a gesture for, but have to pick out of a large set. I think it would be best for things like programs. In its current form it could use further tweaking or UI improvements to deal with the cognitive burden of use, that is, the fact that you have to memorize the gestures. Other solutions would be better for sorting pictures or text files out of large sets.
One other thing to note: it's occurred to me that you can shrink the sets of things you search, and make searches more efficient, by constraining searches to things that actually exist on the system. That may make sub-searches within the weapon wheel more doable? E.g., I have 13 programs that start with A but only 11 unique second letters (aC, aD, aL, etc.). Not really a new idea, per se; the Win 10 search already makes use of this. After you type just a few letters, it shows you only the things on the system that match them. Unfortunately, it also automatically searches the web if it can't find the thing or you misspelled it, which I hate. I'd love to be able to constrain it to only search things it thinks exist.
I never asked, Ross: you mentioned that you often find yourself trying to locate a program without knowing its name. I'd toyed with date ranges as a possible filter for getting at that kind of information, but I never explicitly asked: what kind of info DO you usually know about programs whose names you can't recall?
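The "only search what exists" idea is essentially prefix filtering over the list of installed programs. A minimal Python sketch, assuming you already have that list of names (collecting it on Windows is out of scope here); both function names are hypothetical. `local_matches` returns only real items, so a typo yields an empty list rather than a web search, and `next_letters` shows which next characters are even possible, which is what would shrink each sub-level of a wheel.

```python
def local_matches(names, prefix):
    """Return installed names starting with prefix (case-insensitive).

    An unmatched prefix simply returns an empty list; there is no web fallback.
    """
    p = prefix.lower()
    return [name for name in names if name.lower().startswith(p)]


def next_letters(names, prefix):
    """Return the sorted set of characters that can follow prefix."""
    p = prefix.lower()
    return sorted({
        name[len(p)].lower()
        for name in local_matches(names, prefix)
        if len(name) > len(p)
    })


installed = ["Acrobat", "Audacity", "Aseprite", "Anki", "Blender"]
print(next_letters(installed, "a"))  # only 4 possible next letters, not 26
print(local_matches(installed, "aud"))
```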

-

That rocks! That is so good. I actually like how this feels a lot more than I thought I would. There's only one thing that would SLIGHTLY improve it, but whether it makes sense to implement depends on the longer-term plans. I do find myself overshooting the icons, and that slows me down a bit. Would it be possible to highlight an icon based on the position of the mouse relative to the center of the wheel, rather than on whether I'm actually over the icon? E.g., if I overshoot the top icon but am still in the triangle of space above it, it still gets selected? Of course, if you eventually plan to stack several tiers of icons on top of one another, that isn't such a good idea.
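Direction-based highlighting like this is usually done by converting the cursor's offset from the wheel's center into an angle and mapping that angle to a slice, so overshooting an icon still lands in its slice. A minimal Python sketch under my own assumptions: screen coordinates with y growing downward, item 0 centered at the top, items numbered clockwise, and a hypothetical `dead_zone` radius near the center where nothing is selected.

```python
import math


def sector_for(mouse_x, mouse_y, center_x, center_y, n_items, dead_zone=10.0):
    """Return the index of the wheel slice containing the cursor's direction.

    Returns None inside the dead zone. Distance beyond the dead zone is
    ignored, so overshooting an icon still selects it.
    """
    dx = mouse_x - center_x
    dy = center_y - mouse_y  # flip so positive dy means "up" on screen
    if math.hypot(dx, dy) < dead_zone:
        return None
    # Angle measured clockwise from straight up, normalized to [0, 360).
    angle = math.degrees(math.atan2(dx, dy)) % 360
    width = 360 / n_items
    # Shift by half a slice so item 0 spans -width/2 .. +width/2 around the top.
    return int(((angle + width / 2) % 360) // width)


# 8-item wheel centered at (500, 500); cursor far above the top icon.
print(sector_for(500, -2000, 500, 500, 8))
```

If stacked tiers were added later, distance could pick the ring (inner vs. outer), though overshooting an inner-ring icon would then land on the outer ring, which is the trade-off mentioned above.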

-

Well, okay, switching gears to aesthetics: have you seen some of the custom desktops people put together for themselves using Rainmeter as a basis? I genuinely have no idea what your aesthetic preferences are exactly, but it can serve a pretty wide range of tastes. To be fair, you might already be using it: you've got CPU, RAM, and drive-space monitors, and it's kind of the go-to for those things in my mind. I've been playing around with it a bit on this Win 10 system myself, and I'm kind of into it so far. I can't recall whether someone else in here mentioned it or I just remembered it from the last time I worked seriously at modding my desktop GUI. But as some of the examples on DeviantArt show, you can REALLY push it and make the computer a feast for the eyes. ...The, um, downside is that if you want to customize anything in a way the skin's creator didn't explicitly design for, you have to jump into a config file that is all-but-code. OTOH, that's just for setup; it's very much a GUI after that. Also bear in mind that even I, an almost complete code neophyte who can't stand using the console, can puzzle out Rainmeter script. I'm mentioning it in case it hasn't been mentioned, and amplifying it if it has; I don't want to re-read the whole forum to figure out which.

-

Ugh. Awful. Not surprising, but awful. Okay, I take back what I said about this just being an interesting thought experiment. F--- those guys. How do we make literally every aspect of the UI custom?

-

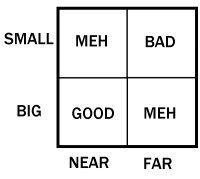

...Hilariously, I think you just accidentally strengthened Ross's argument while trying to explain why you don't like it. That's basically a diagram of the relationship between the two variables inside the log in Fitts's law: the distance to the target and the target's width. More or less *by definition*, even if small-and-near and far-and-big are roughly equally inefficient, you're inadvertently pointing out that, well, neither one is as good as big-and-near. So Ross's seemingly contradictory statements can both be read as true to some degree, since both approaches he's complaining about are sub-optimal, again, by definition. I'm not sure the best takeaway from that realization is frustration that Ross doesn't know what he wants. He doesn't know *specifically* what he wants, and I don't think he's even claiming to, but in general we can guess that he wants targets that are bigger and nearer, so to speak. It feels like a bit much to go after him for throwing out ideas that are at cross purposes, given that his thesis was essentially: "I know this is inefficient, and I need help figuring out what a more efficient GUI would look like." It's like complaining that the ideas in a brainstorming session don't all fit together. I'm pretty sure that if he knew exactly what he wanted, he wouldn't be asking.
I admit I was initially confused about what design parameters he had in mind, but I think the common thread that's emerged in this discussion is basically: A) it needs to be optimized for efficiency, and B) it needs to be friendly to mouse users rather than all about the keyboard. Lots of stuff can fall under that umbrella. And while you can certainly argue that the Windows GUI is a little better put together than he gives it credit for, there's something to be said for getting into an innovator's mindset and asking how we could rethink things.
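For anyone who wants to check that numerically, here's a small Python sketch of the Shannon formulation of Fitts's law, MT = a + b·log2(D/W + 1), where D is the distance to the target and W is its width. The constants a and b are device- and user-specific and have to be fitted from measurements; the defaults below are placeholders, not real data.

```python
import math


def index_of_difficulty(distance, width):
    """Fitts's index of difficulty (Shannon formulation), in bits."""
    return math.log2(distance / width + 1)


def movement_time(distance, width, a=0.1, b=0.15):
    """Predicted pointing time in seconds.

    a and b are placeholder constants for illustration; real values come
    from fitting measured pointing times for a given device and user.
    """
    return a + b * index_of_difficulty(distance, width)


# Same D/W ratio, so same difficulty: small-and-near vs. far-and-big.
print(index_of_difficulty(100, 10), index_of_difficulty(1000, 100))
# Big-and-near has a much smaller ratio, so it beats both.
print(index_of_difficulty(100, 100))
```

Only the ratio D/W matters, which is why shrinking a target and bringing it closer can cancel out, while making it bigger *and* nearer always helps.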
I think this board has already turned up a couple of interesting things I hadn't heard of, and I hope it will continue to in the next few days. Sometimes it's okay to sit with uncertainty for a little while. Not everything needs a clear direction right away, and pushing for one can stop all the necessary pieces of the puzzle from emerging. Hey, I struggle with it too; I won't front. Just saying, though. Incidentally, so what if Ross *does* have a problem with Microsoft? So has most of the computer-using world since, what, the late 1980s. Good luck presenting something on any topic without your biases sneaking in. That's life; you do your best anyway. Doesn't mean the GUI couldn't be better.

-

I actually do this. My Dad hates having his desktop cluttered, so he showed me how to do it. One other note on this subject: it's ergonomically awful, since it involves clicking a tiny thing, but if you hide your desktop items, these double arrows will still give you access to the contents of your desktop. I mention this because it means there's something built into the shell for showing the desktop's contents, and for all I know, people making custom GUIs and adjuncts might already use that, or be able to.

-

Yeah, I edited my post to update it so I don't spread confusion. Definitely a derp on my part. I had started drafting that post a while ago, and had skimmed page 9 but not read it in detail when I posted. Apologies; I know it's infuriating to explain the same thing over and over, and honestly it was entirely because I was being somewhat lazy after a long day. I'd forgotten the bit you said about doing a lot with one hand and more with two. I might quibble a bit with the model, which may be one reason it didn't stick with me: going back to the video game control scheme analogy, I see the best scheme as one where the two hands work synergistically. HOWEVER, if you wanted to realize something closer to your model, my discussion of how to extract the most data from mouse use alone DEFINITELY still applies; it's just that the left-hand keyboard inputs I proposed would be de-emphasized or reworked to be less necessary at each stage. Sorry again about introducing confusion.

-

EDIT: Okay, I drafted this a bit last night, so I'm going to make a small edit. If I understand Ross's most recent posts correctly, it is *indeed*, as I originally surmised from the video, the act of switching between the keyboard and the mouse that is the fundamental problem for a control scheme? In other words, it's not that everything must be done with the mouse; it's just that using the full keyboard slows things down. I'd say modern video game designers generally agree. Hence my proposal to take a page from video games and make a hybrid using only hotkeys within easy reach of the WASD gaming position (though I realize I kind of buried that info in the design document, so it may have looked like I was advocating any old hotkeys, full stop). After all, it's not like you ever use two mice at a time, so otherwise your left hand is just kind of hanging out? And I figure most people who play games should be pretty comfortable with the layout, though that may admittedly not include the kind of games Ross tends to play.
In terms of maximizing the power of the mouse, and certainly if anyone is seriously considering driving with only the mouse: to be honest, optimally I actually WOULD like to use a mouse with more buttons on it. I know that was a bit of a joke in the video, but IRL my mouse is an MMO mouse with 12 remappable side buttons, explicitly so I can do more things with it. I think the radial menu idea has merit, but stacking nodes becomes a problem (I have something like it in my own mock-up, and it's honestly the part of it I like least). The first thing I ever saw with a radial menu almost exactly like the one Ross mocked up was actually "The Sims", and even in that context it could get overwhelming in practice with a mere 12 options.
You could alleviate that by having the first tier of options act like "folders", with a second tier of more "file"-level options that fit inside them and come up when you mouse over a "folder". On some level there's almost an information-theory problem underlying trying to get too much from too little with the mouse: the amount of information the mouse's actions provide has to match the number of things you want to be able to specify with it (roughly; I could complicate that, but let's not). Basic stuff, but I think framing it that way clarifies the challenge? You can make the target much softer by manually creating a subgroup, e.g., from the example above, CONSTRUCTING a folder that you put specific files or programs in to invoke. Put another way, it's easier to use a mouse to specify one of 12 hand-picked things than to find that same item in a file system of thousands of things organized in varying ways. But the hand-crafting solution seems inherently impossible to scale, and probably couldn't allow full control of a system on its own. From a purely theoretical perspective, I feel like gestures or fighting-game-esque button combinations are really your best bet at approximating full control. (A multi-button mouse truly COULD unite a lot of this control on the mouse, because the maximum number of uniquely invocable files or programs is X^N, where X is the number of buttons on the mouse and N is the number of presses in a combination. My mouse has a total of 16 buttons, not counting the DPI and mode switches. Get 3 or 4 buttons deep and that's a serious number of options.) That is, I can imagine those generating the most bang for the buck in terms of simple solutions that can specify one item among hundreds or thousands in relatively short time scales. Not to let the air out of the balloon on gestures, but in practice I really dislike them.
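The X^N arithmetic and the information-theory framing are easy to sanity-check. A small Python sketch; the function names are mine and purely illustrative. `combo_count` counts ordered button sequences with repeats allowed, `presses_needed` finds the shortest combo length that covers a given number of items, and `math.log2` gives the bits needed to single out one item.

```python
import math


def combo_count(buttons, presses):
    """Ordered sequences of exactly `presses` presses, repeats allowed: X**N."""
    return buttons ** presses


def combos_up_to(buttons, max_presses):
    """Sequences of any length from 1 to max_presses."""
    return sum(buttons ** n for n in range(1, max_presses + 1))


def presses_needed(buttons, items):
    """Smallest N with buttons**N >= items (integer loop avoids float rounding)."""
    if buttons < 2:
        raise ValueError("need at least 2 buttons to distinguish items")
    n = 1
    while buttons ** n < items:
        n += 1
    return n


print(combo_count(16, 3), combo_count(16, 4))  # 16-button mouse, 3 or 4 deep
print(combo_count(12, 2))  # two-tier "folder/file" wheel, 12 slots per tier
print(presses_needed(16, 1000), math.log2(1000))  # presses vs. bits for 1000 items
```

One caveat: if shorter combos are also valid commands, the system needs a timeout or an explicit "commit" action to know when a sequence has ended, since every 3-press combo begins with a valid 2-press one.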
I do have a small proposed fix for them, but I'll start with my gripes. I was an early adopter of gestures and tried, I believe, both the old-school Opera gestures with the pop-up guide and some kind of gesture plugin for Firefox, and used both for quite some time (I was/am a bit of a browser enthusiast). For simple things they were alright; for more complex things I just found myself preferring to do it the old way. Part of it was a not-insubstantial error rate. It can be harder than it looks to programmatically identify even a simple shape once you get up to 3 strokes, and the problem worsens the more gestures there are, because there are only so many ways to arrange 3 strokes, certainly when all the lines have to be contiguous. Throw in curved strokes (given that it's actually quite hard to draw a perfect line with the mouse) and the problem gets even harder. THAT SAID, I do have one thought on making them more workable: you could bring up a phone-password-style grid when you press the gesture hotkey. That would at least solve the problem of gestures being hard for the computer to interpret, and greatly expand the number of unique invocations possible, depending on the number of pegs in the grid, though you'd still need to memorize the symbols, of course.
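Here's roughly how the phone-password grid could take the guesswork out of recognition: instead of classifying a freehand shape, the system only records which pegs the cursor passed over. A minimal Python sketch, assuming a 3x3 grid with pegs numbered 0-8 left to right, top to bottom, and each peg counted at most once, similar to a phone pattern lock. The grid geometry, hit radius, and `GESTURES` entries are all hypothetical.

```python
import math

# Hypothetical gesture table: peg sequence -> action name.
GESTURES = {
    (0, 1, 2): "open browser",
    (0, 4, 8): "open terminal",
    (2, 4, 6, 7, 8): "open image editor",
}


def snap_path(points, grid_size=3, cell=100.0, hit_radius=30.0):
    """Turn raw cursor points into the ordered tuple of pegs visited.

    Pegs sit at the centers of grid_size x grid_size cells, with the grid's
    top-left corner at (0, 0). A peg registers the first time the cursor comes
    within hit_radius of its center; later visits are ignored.
    """
    visited = []
    for x, y in points:
        col, row = int(x // cell), int(y // cell)
        if not (0 <= col < grid_size and 0 <= row < grid_size):
            continue  # outside the grid entirely
        cx, cy = (col + 0.5) * cell, (row + 0.5) * cell
        if math.hypot(x - cx, y - cy) <= hit_radius:
            peg = row * grid_size + col
            if peg not in visited:
                visited.append(peg)
    return tuple(visited)


def recognize(points):
    """Return the action for an exact peg sequence, or None (no guessing)."""
    return GESTURES.get(snap_path(points))


# A slightly wobbly stroke across the top row still snaps to (0, 1, 2).
stroke = [(48, 55), (100, 60), (152, 47), (205, 50), (249, 53)]
print(snap_path(stroke), recognize(stroke))
```

Because recognition is an exact dictionary lookup on the pegs you touched, there's no fuzzy shape-matching step to get wrong: an undefined sequence just returns None instead of misfiring as the "closest" gesture.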

-

Collapsar77 started following THE GUI SHOULD BE BETTER

-

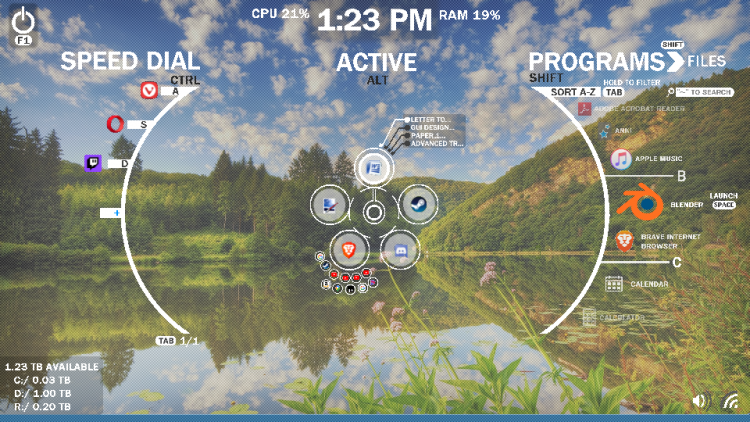

Not sure what to do about the windows themselves, but I have some thoughts about other parts of the GUI. I've enclosed more detail on how to navigate the screens, but I think/hope it should be relatively self-explanatory. The only bit that is certainly not immediately obvious is that the "control surface" (the full-screen interface for the computer that appears as an overlay on the desktop) would be accessed by remapping Alt+Tab, by using the Windows key, or by clicking the thin taskbar seen at the bottom. Any other details you may need should be in the attached .txt. You can ask me lingering questions here, and I'll get to them when I get to them. Apologies for the crude visuals; these are mock-ups, merely intended to get the idea across. In practice, the specific implementation could vary considerably.
GUI Design Documentation.txt
